There are only three stories in this category and six or seven in each of the remaining ones so I don’t have to split this post into two parts.


Dingo Pictures Works: Fairy Tales

September 22nd, 2017

Dingo Pictures Works: Thrillers

September 11th, 2017

Today I’m covering the great works from Dingo Pictures. I intend to split the review into roughly the same categories as they are put on the official website and today we start with the first section. Its name is “Krimis” in German, which I think is more appropriately translated as “thriller” than “mystery story” or “detective story”.


Dingo Pictures: Art Style

September 1st, 2017

The prolific German animation studio made 28 animated films during the 1990s and early 2000s and obviously they’ve managed to develop their own unique style. In this post I’ll try to describe it.

Three Problems in Supporting Multimedia Formats

August 31st, 2017

As you probably don’t care, I’m working on a RealMedia demuxer for NihAV. And it’s very straightforward: chunks without nesting, a version field to guard against surprises, clean layout. The only peculiarities it has are audio data interleavers and the so-called logical stream (the special entry that describes how to select streams for streaming depending on the available bitrate). And yet the implementation for this format in libavformat is quite complex and baffling. This observation led me to playing Captain Obvious and stating these three problems:

- Following specification. Unless it’s an ISO/IEC or ITU codec you usually have a quite lacking specification with details omitted (or, as DT$ representatives put it, “but we have it in the SDK!”, which helps a lot when you can download only the ETSI paper). In some cases the original implementation is the only specification you have. I’m no stranger to working with binary specifications but quite often they still don’t say what to expect in some cases (and then fuzzing happens…);

- Supporting hacks and abuses of specification. Two examples: MP3 and AVI. Or MP3 in AVI. For instance, MP3 has an optional CRC field (so if you don’t want CRC you simply don’t put it there) but I’ve seen samples that put zero checksum instead. Or in AVI you’re supposed to have `42db` chunks for uncompressed video frames, `42dc` for compressed video frames and `42wb` for audio data. In reality you can have `dc` and `db` identifiers mixed in the same stream or even `0041` chunks put inside `LIST rec` chunk (that’s Indeo 4.1 in CivII clips). And of course there are many many more examples that everybody who has encountered them tries to forget.

- Seeking. You might wonder how seeking gets here and I’ll tell you how: most formats are not designed for random seeking and even if they are, users would still want to ignore indices, jump to a random position and find a start of the next chunk and timestamp as well. And in `libavformat` that is performed by a binary search that invokes special `read_timestamp` function of the demuxer (if present) which is supposed to do exactly that: searching for packet start and reporting a timestamp.
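To make the AVI example above a bit more concrete, here is a minimal sketch of a strict parser for stream chunk identifiers. The names `ChunkKind` and `parse_stream_chunk_id` are made up for illustration and the two leading characters are assumed to be a decimal stream number; this is not how any particular demuxer does it.

```rust
/// Kind of payload named by the two-letter suffix of an AVI stream chunk ID.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ChunkKind {
    UncompressedVideo, // "db"
    CompressedVideo,   // "dc"
    Audio,             // "wb"
}

/// Splits a four-byte chunk ID such as b"42dc" into (stream number, kind).
/// Assumption: the first two bytes are decimal digits.
/// Returns None for anything that does not follow the "NNxx" pattern.
pub fn parse_stream_chunk_id(tag: &[u8; 4]) -> Option<(u8, ChunkKind)> {
    if !tag[0].is_ascii_digit() || !tag[1].is_ascii_digit() {
        return None;
    }
    let stream = (tag[0] - b'0') * 10 + (tag[1] - b'0');
    let kind = match (tag[2], tag[3]) {
        (b'd', b'b') => ChunkKind::UncompressedVideo,
        (b'd', b'c') => ChunkKind::CompressedVideo,
        (b'w', b'b') => ChunkKind::Audio,
        _ => return None,
    };
    Some((stream, kind))
}
```

A strict parser like this rejects the `0041` chunks entirely, and a demuxer that trusted the `db`/`dc` distinction would mishandle streams that mix them. That is exactly where the hacks start piling up.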

The moral of the story: if you can afford to ignore stupid user requests, do so and cherish the fact that you can. In NihAV I’m going to implement seeking only for formats that allow that (with reading index) or by more generic linear seeking that skips frames. This should be enough for my needs and it’ll keep code simple too.
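The two seeking strategies mentioned above could be sketched roughly like this. It is only an illustration under assumptions: `IndexEntry`, `seek_with_index` and `seek_linear` are hypothetical names, the index is assumed to be sorted by timestamp, and packets are modelled as plain `(pts, pos)` pairs rather than anything NihAV actually uses.

```rust
/// One entry of a container index: timestamp and file position of a seek point.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct IndexEntry {
    pub pts: u64,
    pub pos: u64,
}

/// Index-based seeking: position of the last indexed seek point at or before
/// `target_pts`. Assumes `index` is sorted by `pts`.
pub fn seek_with_index(index: &[IndexEntry], target_pts: u64) -> Option<u64> {
    // Number of entries whose timestamp does not exceed the target.
    let n = index.partition_point(|e| e.pts <= target_pts);
    if n == 0 {
        None
    } else {
        Some(index[n - 1].pos)
    }
}

/// Linear seeking: walk packets in stream order, skipping everything before
/// `target_pts`, and return the position of the first packet at or after it.
pub fn seek_linear<I>(mut packets: I, target_pts: u64) -> Option<u64>
where
    I: Iterator<Item = (u64, u64)>, // (pts, pos)
{
    packets
        .find(|&(pts, _)| pts >= target_pts)
        .map(|(_, pos)| pos)
}
```

Neither function needs to resynchronise in the middle of a packet, which is the whole point of not supporting jumps to a random byte position.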

Now that it’s become a bit colder I might actually resume my work on NihAV and even more important thing—describing Dingo Pictures art style and works.

A Bit About Legendary German Animation

August 5th, 2017

If you talk about German films as a foreigner you might know some good ones like influential Fritz Lang movies (for Frau im Mond they’ve hired Hermann Oberth himself and as the result their depiction of space travel looks much more realistic than modern Hollywood flicks) or Gojko Mitić adventure features (the Eastern Westerns). But if you’re not from Germany, what German cartoons do you know? Looks like the only German cartoons that got some widespread action are those from Dingo Pictures.

Dingo Pictures is a company located in Taunus that has produced about thirty Hess(l)ich cartoons in the second half of 1990s. Some of those were completely unique and some were ripped off by D*sney and Don Bluth earlier.

Dingo Pictures has their own unique and easily recognizable style. But before I explain it, here are the eponymous animals in one of their cartoons:

So, Dingo Pictures was a pioneer, combining computer drawn animation with 2D drawn background (watercolours no less!). Also like the anime father, Osamu Tezuka, the company had a cast of actors always appearing in every cartoon.

For example:

The Bear (he often changes scenes and complains about everything)


And here’s the star of all Dingo Pictures cartoons, the one and only Wabuu:

Wabuu!


In case you didn’t know, Wabuu was so popular that he had his own original cartoon, title song (that can be heard in several other cartoons too) and even audio books! Even now the DVD with his own cartoon costs at least twenty Euro on Amazon and about ten Euro used (for comparison, you can buy almost any other used DVD with Dingo Pictures cartoon for one eurocent).

Anyway, we were talking about the style. It’s hard to express in words what makes Dingo Pictures cartoons so charming but I think phrases “record-mending animation quality”, “copy-pasting everything”, “reusing the same scenes in other cartoons”, “more padding than Star Trek The Motionless Picture” and “dull voice acting” would do.

Here are some shots from one of their longer films, King of the Animals (or Lion King for short), don’t mind the quality, I tried to be lazy:

Title card. One of the best ones, honestly.


Every animal is uniquely redrawn.


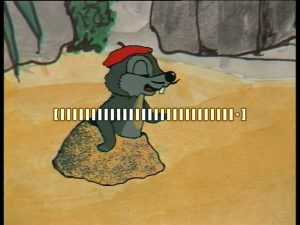

One of moles (as you can guess from its look this mole is Italian).


The story is very typical: the lion rules the jungle full of monkeys, hippos, crocodiles and vultures (and hares, squirrels and bears; the bear picture above is from this cartoon). One day a monkey finds diamonds but they decide not to mine them in fear of humans coming. With the birth of his son, the lion king loses interest in ruling and the black panther seizes the power with cheating and false promises and exiles the king. Later, with the help of snake, vultures and bear the panther is defeated. If you think you’ve heard this story elsewhere, don’t worry, it has unexpected twists in it.

And in the end we have scenes like this:

The vultures asked for a computer with phone and modem for their help.


The Black Panther is defeated!


BTW the snake enjoys reading books and quotes Shakespeare, Goethe, Karl Marx and Gorbachov. If you find this strange how could you get past the fact the panther’s name is Bocassa?

How can one not enjoy masterpieces like this! Oh, and every time I hear sirens I remember the duck from Animal Football, that’s how much their art has touched me.

There’s a sequel to it, simply called King of the Animals The Second Part but it’s of lesser quality IMO.

The other noteworthy cartoons are Aladdin (the genie there is a famous actor who is not Robin Williams), Animals Football (there they’ve copy-pasted all their animal characters and then some), Bremen Musicians (it has live narrator filmed, not just animation), The Case for Mouse Police (it simply needs to be seen to be believed) and of course Wabuu.

P.S. For some reason DVDs are distributed by P*wer Channel GmbH and don’t mention the original creator anywhere except in the video. They are that modest.

P.P.S. Honestly, I don’t think I’ve heard about any other German cartoons. But these cartoons have reviews in BaidUTube channels of people from countries like Canada and Sweden (the latter is in Swedish of course, actually Wabuu song sounds even better in Swedish than in German).

#chemicalexperiments — Lasagne

August 5th, 2017

Let me start with a bit of history.

Normal people don’t care much how to eat their pasta: they simply cook it, add whatever they have (even mayo probably or nothing at all) and eat it. Italians are different, they select pasta sauce first and then decide what pasta will go fine with it. In case of meat sauce (or ragù as locals call it) Italians considered that wide plain pasta would go best with it for some reason. So they competed who can use the wider noodles and the guy who simply took the whole plates won. But it was a bit inconvenient to cook them and then mix with sauce so they’ve switched to oven baking the whole thing in sauce instead. And that’s how lasagne was born (probably; Italians have a completely different story to tell but they always do).

Since I’d better avoid meat entirely, I decided to cook my own version with various components (in several tries too) and here’s my short summary:

- it’s better to use thick sauces;

- tomato sauce is a definite must, it adds flavour;

- cheese sauce is good mostly for the lowest layer (to lay lasagne plates on it) and for pouring on top;

- ricotta and Quark make fine layers too, you can even mix them with some vegetables;

- sliced boiled eggs would make a nice addition to a layer with tomato sauce;

- mozzarella is better avoided since it will result in hard chewy chunks contrasting with the texture of the rest of the dish.

Overall, it’s a nice dish, would bake again.

Rust: Optimising Decoder Experience

August 3rd, 2017

Okay, I’ve made some changes so hopefully the server will withstand the curiosity of more than two people if it goes like the last time.

So, after implementing Indeo 4/5 decoders for NihAV I nano-benchmarked it and my decoder was about twice as slow compared to libavcodec. And since neither has SIMD optimisations they should be good enough to compare.

The tested file was 00186002.avi, an Indeo 4 sample with scalability feature (i.e. luma is split into four bands and uses Haar wavelet to compose the output plane) and duration over ten minutes. The results I got will be given in Linux perf sample counts as those should be representative enough.
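For those who wonder what recombining four bands with a Haar wavelet means in practice, here is a generic 2×2 Haar synthesis step as a sketch. The band order, the `+ 2` rounding, the `+ 128` bias and the function names are my assumptions for illustration, not necessarily what either decoder does.

```rust
/// Clamps an intermediate value into the 0..=255 range of an output pixel.
fn clip8(v: i32) -> u8 {
    v.clamp(0, 255) as u8
}

/// Recombines four half-resolution bands into one `w` x `h` plane.
/// Each group of four co-located band samples produces one 2x2 output block.
pub fn recombine_haar(
    bands: [&[i16]; 4],
    band_stride: usize,
    dst: &mut [u8],
    dst_stride: usize,
    w: usize,
    h: usize,
) {
    for y in 0..h / 2 {
        for x in 0..w / 2 {
            let i = y * band_stride + x;
            let b0 = i32::from(bands[0][i]);
            let b1 = i32::from(bands[1][i]);
            let b2 = i32::from(bands[2][i]);
            let b3 = i32::from(bands[3][i]);
            // Top-left corner of the 2x2 output block.
            let o = 2 * y * dst_stride + 2 * x;
            dst[o] = clip8(((b0 + b1 + b2 + b3 + 2) >> 2) + 128);
            dst[o + 1] = clip8(((b0 + b1 - b2 - b3 + 2) >> 2) + 128);
            dst[o + dst_stride] = clip8(((b0 - b1 + b2 - b3 + 2) >> 2) + 128);
            dst[o + dst_stride + 1] = clip8(((b0 - b1 - b2 + b3 + 2) >> 2) + 128);
        }
    }
}
```

Every output pixel needs four loads, a handful of additions and a clip, so on a long file it is no surprise that this step shows up prominently in the profile.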

avconv: 13.4 seconds, 10K cycles. About 24% spent in luma plane recombination (with Haar wavelet), about 40% of time is taken by bitstream decoding and the rest is mostly transforms and motion compensation.

nihav-tool: 31.6 seconds, 20K cycles. 30% spent in luma plane recombination, 48% of time is taken by bitstream decoding, 11% is for motion compensation and the rest is mostly transforms. Or in samples: recombination is 9900 (against 3300 in libavcodec), bitstream decoding (dirty estimate, it includes some DSP functions inlined) is 15800 against 5600, motion compensation is 3500 against 1700, transforms are 1300 against 1500 (they are not equivalent though, my code only transforms the block and output costs are hidden in bitstream decoding). Overall, my code is consistently worse. Is there any way to optimise it a bit?


Rust: Not So Great for Codec Implementing

July 31st, 2017

Disclaimer: obviously it’s my opinion, feel free to prove me wrong or just ignore.

Now that I should qualify as a zoidberg (slang name for a lowly programmer in Rust who lives somewhere in a dumpster and who is also completely ignored, a perfect definition for me) I want to express my thoughts about the programming experience with Rust. After all, NihAV was restarted to find out how modern languages fare for my favourite task and there was about one language that was promising enough. So here’s a short rant about the aspects of this programming language that I found good and not so good.

Good things

- Modern language features: standard library containers, generics, units and their visibility etc etc. And at least looks like Rust won’t degrade into metaprogramming language any time soon (that’s left for upcoming Rust+=1 programming language);

- Reasonable encapsulation: I mean both (sub)modules organisation and the fact that functions can be implemented just for some structure;

- Powerful enums that can act both as plain C set of values and also as tagged objects, e.g. the standard `Result` enum has two variants, `Ok(result)` and `Err(error)`, where `result` and `error` are two different user-defined types, so returned value can contain either while being the same type (`Result`);

- More helpful error messages (e.g. it tries to suggest a correction for mistyped variable name or explains an error in a bit more detail). Sure, Real Programmers™ don’t need that but it’s still nice;

- No need for dependency resolving: you can have stuff in one module referencing stuff in another module and vice versa at the same time, same for no need

- Traits (standard interfaces for objects) and the fact that operations are implemented as specific traits (i.e. if you need to have `a + b` with your custom object you can implement `std::ops::Add` for it and it will work). Also it’s nice to extend functionality of some object by making an implementation for some trait: e.g. my bitstream reader is defined in one place but in another module I made another trait for it for reading codebooks so I can invoke `let val = bitread.read_codebook(&cb)?;` later.
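The trait extension trick from the last item can be sketched like this. Everything here is a toy stand-in: `BitReader`, `Codebook`, `ReadError` and `CodebookReader` are hypothetical and far simpler than the real NihAV types, and the codebook is assumed to be plain unary codes just to keep the example short.

```rust
/// Errors the toy reader can report.
#[derive(Debug, PartialEq, Eq)]
pub enum ReadError {
    EndOfData,
    InvalidCode,
}

/// Hypothetical minimal MSB-first bit reader.
pub struct BitReader<'a> {
    src: &'a [u8],
    pos: usize, // position in bits
}

impl<'a> BitReader<'a> {
    pub fn new(src: &'a [u8]) -> Self {
        BitReader { src, pos: 0 }
    }

    pub fn read_bit(&mut self) -> Result<bool, ReadError> {
        let byte = *self.src.get(self.pos >> 3).ok_or(ReadError::EndOfData)?;
        let bit = (byte >> (7 - (self.pos & 7))) & 1;
        self.pos += 1;
        Ok(bit != 0)
    }
}

/// Toy codebook: a run of N one bits ended by a zero bit selects symbol N.
pub struct Codebook {
    pub symbols: Vec<u8>,
}

/// Extension trait that could live in a different module than `BitReader`.
pub trait CodebookReader {
    fn read_codebook(&mut self, cb: &Codebook) -> Result<u8, ReadError>;
}

impl<'a> CodebookReader for BitReader<'a> {
    fn read_codebook(&mut self, cb: &Codebook) -> Result<u8, ReadError> {
        let mut idx = 0;
        // `?` passes EndOfData up to the caller without extra boilerplate.
        while self.read_bit()? {
            idx += 1;
        }
        cb.symbols.get(idx).copied().ok_or(ReadError::InvalidCode)
    }
}
```

The reader itself knows nothing about codebooks, yet any code that has `CodebookReader` in scope can call `read_codebook()` on it and get a `Result` holding either a symbol or an error.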

Unfortunately, it’s not all rosy and peachy, Rust has some things that irritate me. Some of them follow from the advantages (i.e. you pay for many features with compilation time) and others come from language design or implementation complexity.

Irritating things that can probably be fixed

- Compilation time is too long IMO. While the similar code in Libav is recompiled in less than a second, NihAV (test configuration) is built in about ten seconds. And any time above five seconds is irritating to wait. I understand why it is so and I hope it will be improved in the future but for now it’s irritating;

- And, on the similar note, benchmarks. While overall built-in testing capabilities in Rust are good (file it under good things too), the fact that benchmarking is available only for limbo nightly Rust is annoying;

- No control over allocation. On one hoof I like that I can not worry about it, on the other hoof I’d like to have an ability to handle it.

- Poor primitive types functionality. If you claim that Rust is systems programming language then you should care more about primitive types than just relying on `as` keyword. If you care about systems programming and safety you’d have at least one or two functions to convert type into a smaller one (e.g. `i16`/`u16` -> `u8`) and/or check whether the result fits. That’s one of the main annoyances when writing codecs: you often have to convert result into byte with range clipping;

- Macros system is lacking. It’s great for code but if you want to use macros to have more compact data representation, tough luck. For example, in Indeo3 codebooks have sequences like `(a,b), (-a,-b), (b,a), (-b,-a)` which would be nice to shorten with a macro. But the best solution I saw in Rust was to declare whole array in a macro using token tree manipulation for proper submacro expansion. And I fear it might be the similar story with implementing motion compensation functions where macros are used to generate required functions for specific block sizes and operations (simple put or average). I’ve managed to work it around a bit in one case with lambdas but it might not work so well for more complex motion compensation functions;

- Also the tuple assignments. I’d like to be able to assign multiple variables from a tuple but it’s not possible now. And maybe it would be nice to be able to declare several variables with one `let`;

- There are many cases where compiler could do the stuff automatically. For example, I can’t take a pointer to `const` but if I declare another `const` as a pointer to the first one it works fine. In my opinion compiler should be able to generate an intermediate second constant (if needed) by itself. Same for function calling: why does `bitread.seek(bitread.tell() - 42);` fail borrow check while `let pos = bitread.tell() - 42; bitread.seek(pos);` doesn’t?

- Borrow checker and arrays. Oh, borrow checker and arrays.

This is probably the main showstopper for implementing complex video codecs in Rust effectively. Rust is anti-FORTRAN in a sense that FORTRAN was all about arrays and could operate arrays safely while Rust safely prevents you from operating arrays.

Video codecs usually operate on planes and there you’d like to operate with different chunks of the frame buffer (or plane) at the same time. Rust does not allow you to mutably borrow parts of the same array even when it should be completely safe like `let mut a = &mut arr[0..pivot]; let mut b = &mut arr[pivot..];`. Don’t tell me about `ChunksMut`, it does not allow you to work with them both simultaneously. And don’t tell me about Bytes crate: it should not be a separate crate, it should be a core language functionality. As a result I have to resort to using indices inside frame buffer and `Rc<RefCell<...>>` for frames themselves. And only dream about being able to invoke `mem::swap(&mut arr[idx1], &mut arr[idx2]);`.

Update: so there’s slice::split_at_mut() which does some of the things I want, thanks Tomas for pointing it out.
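For the record, a small sketch of what `split_at_mut()` allows, plus the standard `slice::swap()` that covers the element-swapping case. The function names and the averaging operation are made up for illustration.

```rust
/// Averages the two halves of a buffer element by element, writing the
/// result into both halves. Both halves are mutably borrowed at once.
pub fn blend_halves(arr: &mut [u8], pivot: usize) {
    let (a, b) = arr.split_at_mut(pivot);
    for (x, y) in a.iter_mut().zip(b.iter_mut()) {
        let avg = ((u16::from(*x) + u16::from(*y) + 1) >> 1) as u8;
        *x = avg;
        *y = avg;
    }
}

/// Swaps two elements of the same slice without any borrow checker fights.
pub fn swap_elements(arr: &mut [u8], idx1: usize, idx2: usize) {
    arr.swap(idx1, idx2);
}
```

It still does not help when the regions you need are not a simple "before and after the pivot" pair, so indices remain the fallback for anything more elaborate.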

And it gets even more annoying when I try to initialise an array of codebooks for further use. The codebook structure does not implement `Clone` because there’s no good reason for it to be cloned or copied around, but when I initialise an array of them I cannot simply declare it and fill the contents in a loop, I have to resort to `unsafe { arr = mem::uninitialized(); for i in 0..arr.len() { ptr::write(&mut arr[i], Codebook::new(...)); } }`. I know that if there’s an error creating new element compiler won’t be able to ensure that it drops only already initialised elements but it’s still a problem of the compiler not being smart enough yet. Certain somebody had an idea of using generator to initialise arrays but I’m not sure even that will be implemented any time soon.
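Much newer toolchains than the one this rant was written against have `std::array::from_fn` (stable since Rust 1.63, as far as I know), which builds such an array without `unsafe` and without `Clone`. A minimal sketch, with a made-up `Codebook` whose contents are just placeholders:

```rust
/// Stand-in codebook type; deliberately neither Clone nor Copy.
pub struct Codebook {
    pub id: usize,
    pub table: Vec<u16>,
}

impl Codebook {
    /// Hypothetical constructor: the table contents are placeholders.
    pub fn new(id: usize) -> Self {
        Codebook {
            id,
            table: vec![0; 1 << (id + 1)],
        }
    }
}

/// Builds a fixed-size array by calling the constructor once per index.
pub fn make_codebooks() -> [Codebook; 4] {
    std::array::from_fn(|i| Codebook::new(i))
}
```

This only works when the constructor cannot fail; for fallible construction one still has to collect into a `Vec` or handle errors by hand.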

And speaking about cloning, why does compiler refuse to generate Clone trait for a structure that has a pointer to function?

And that’s why C is still the best language for systems programming: it still lets you do what you mean (the problem is that most programmers don’t really know what they mean) without many magical incantations. Sure, it’s very good to have many common errors eliminated by design but when you can’t do basic things in a simple way then what is it good for?
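Going back to the primitive types complaint from the list above: the range clipping and the "does it fit" check can be written as two tiny helpers in current Rust. This is a sketch with made-up function names; `clamp` on integers and the `TryFrom` conversions are newer than this post, so treat their availability as an assumption about your toolchain.

```rust
use std::convert::TryFrom;

/// Converts a signed 16-bit value to a byte with range clipping,
/// the operation codecs need all the time.
pub fn clip_to_u8(v: i16) -> u8 {
    // After clamping the value is guaranteed to fit, so `as` is lossless here.
    v.clamp(0, 255) as u8
}

/// Converts only if the value fits, returning None otherwise.
pub fn checked_to_u8(v: i16) -> Option<u8> {
    u8::try_from(v).ok()
}
```

So the building blocks exist, but you still have to wrap them yourself for every pair of types you care about.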

Annoying things that cannot be fixed

- `type` keyword. Since it’s a keyword it can’t be used as a variable name and many objects have type, you know. And it’s not always reasonable to give a longer name or rewrite using enum. Similar story with `ref` but I hardly ever need it for a variable name and `ref_<something>` works even better. Still, it would be better if language designers picked `typedef` instead of `type`;

- Not being able to combine `if let` with some other condition (those nested conditions tend to accumulate rather fast);

- Sometimes I fear that compilation time belongs to this category too.

Overall, Rust is not that bad and I’ll keep developing NihAV using it but keep in mind it’s still far from being perfect (maybe about as far as C but in a different direction).

P.S. I also find the phrase “rewrite in Rust” quite stupid. Rust seems to be significantly different from other languages, especially C, so while “Real Programmers can write FORTRAN program in any language” it’s better to use new language features to redesign interfaces and make new overall design instead of translating the same mistakes from the old code. That’s why NihAV will lurch where somebody might have stepped before but not necessarily using the existing roads.

NihAV — Some News

July 30th, 2017

So, despite work, heat, travels, and overall laziness, I’ve managed to complete more or less full-featured Indeo 4 and 5 decoder. That means that my own decoder decodes Indeo 4 and 5 files with all known frame types (including B-frames) and transforms (except DCT because there are no known samples using it) and even transparency!

Here are two random samples from Civilization II and Starship Titanic decoded and dumped as PGM (click for full size):

I’m not going to share the code for transparency plane decoding, it’s very simple (just RLE) and the binary specification is easy to read. The only gotchas are that it’s decoded as contiguous tile aligned to width of 32 (e.g. the first sample has width 332 pixels but the transparency tile is 352 pixels) and the dirty rectangles provided in the band header are just a hint for the end user, not a thing used in decoding.
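The width alignment mentioned above is the usual round-up-to-a-multiple trick. A one-function sketch (the name `align_up` is mine, and it assumes the alignment is a power of two):

```rust
/// Rounds `value` up to the nearest multiple of `align`.
/// Assumption: `align` is a power of two (32 for the transparency tile).
pub fn align_up(value: usize, align: usize) -> usize {
    debug_assert!(align.is_power_of_two());
    (value + align - 1) & !(align - 1)
}
```

With the numbers from the first sample, `align_up(332, 32)` gives 352, which matches the transparency tile width.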

This decoder was written mostly so that I can understand Indeo better and what can I say about it: Indeo 4/5 is about the same codec with some features fit for more advanced codecs of the later era. While the only things it reuses from the previous frames are pixels and band transform mode, it can reuse decoded quantisers and motion vectors from the first band for chroma bands and luma bands 1-3 in scalability mode too. It has variable block sizes (4×4, 8×8 and 8×8 in 16×16 macroblock) with various selectable transforms and scans (i.e. you can have 2D, row or column Slant, Haar or (theoretically) DCT and scans can be diagonal, horizontal or vertical too). And there were several frame types too: normal I-, P- and B-frames, droppable I- and P-frames, and droppable P-frame sequence (i.e. P-frames that reference the previous frame of such type or normal I/P-frame). Had it had proper stereo support, it’d be still as hot as ITU H.EVC.

The internal design between Indeo 4 and 5 differs in small details, like Indeo 4 having more frame types (like B-frames and droppable I-frames) — but Indeo 5 had introduced droppable P-frame sequence; picture and band headers differ between versions but (macro)block information and actual content decoding is the same (Indeo 5 does a bit trickier stuff with macroblock quantisers but that’s all). Also Indeo 4 had transparency information and different plane reconstruction (using Haar wavelet instead of 5/7 used in Indeo 5). So, in result my decoder was split into several modules reflecting the changes: indeo4.rs and indeo5.rs for codec-specific functions, ivi.rs for common structures and types (e.g. picture header, frame type and such), ividsp.rs for transforms and motion compensation and ivibr.rs for the actual decoding functions.

As with Intel H.263 decoder, Indeo 4/5 decoders provide implementations for IndeoXParser that parse picture header, band header and macroblock information and also recombine back plane in case it was coded as scalable. In result they store not so much information, just the codebooks used in decoding and for Indeo5 the common picture information that is stored only for I-frames (in other words, GOP info).

As a result, here’s what the Indeo 4 main decoding function looks like:

    fn decode(&mut self, pkt: &NAPacket) -> DecoderResult<NAFrameRef> {
        let src = pkt.get_buffer();
        let mut br = BitReader::new(src.as_slice(), src.len(), BitReaderMode::LE);
        let mut ip = Indeo4Parser::new();
        let bufinfo = self.dec.decode_frame(&mut ip, &mut br)?;
        let mut frm = NAFrame::new_from_pkt(pkt, self.info.clone(), bufinfo);
        frm.set_keyframe(self.dec.is_intra());
        frm.set_frame_type(self.dec.get_frame_type());
        Ok(Rc::new(RefCell::new(frm)))
    }

with the actual interface for parser being

    pub trait IndeoXParser {
        fn decode_picture_header(&mut self, br: &mut BitReader) -> DecoderResult<PictureHeader>;
        fn decode_band_header(&mut self, br: &mut BitReader, pic_hdr: &PictureHeader, plane: usize, band: usize) -> DecoderResult<BandHeader>;
        fn decode_mb_info(&mut self, br: &mut BitReader, pic_hdr: &PictureHeader, band_hdr: &BandHeader, tile: &mut IVITile, ref_tile: Option<Ref<IVITile>>, mv_scale: u8) -> DecoderResult<()>;
        fn recombine_plane(&mut self, src: &[i16], sstride: usize, dst: &mut [u8], dstride: usize, w: usize, h: usize);
    }

And the nano-benchmarks:

the longest Indeo4 file I have around (00186002.avi) — nihav-tool 20sec, avconv 9sec plus lots of error messages;

Mask of Eternity opening (Indeo 5) — nihav-tool 8.1sec, avconv 4.1sec.

Return to Krondor intro (Indeo 5) — nihav-tool 5.8sec, avconv 2.9sec.

For other files it’s also consistently about two times slower but whatever, I was not trying to make it fast, I tried to make it work.

The next post should be either about the things that irritate me in Rust and make it not so good for codec implementing or about cooking.

A Bit about Airlines

July 25th, 2017

I did not want to have personal rants in my restarted blog but sometimes material just comes and presents itself.

As some of you might know, I prefer travelling by rail; yet sometimes I travel by plane because it’s faster. Most of those flights are semiannual flights to Sweden and an occasional flight to elenril-city. And here’s the list of unpleasant things I had with flights:

- Planes being late for more than an hour (because of technical reasons) — two Lufthansa flights from Arlanda;

- Plane being late just because — Aerosvit, once;

- Baggage not loaded on plane — Cimber Sterling (aka Danish Aerosvit);

- Flights cancelled because of strike — once SAS and once Lufthansa;

- Flight being cancelled because of plane malfunction — Lufthansa once;

- Flight being cancelled because they didn’t want to wait for the passengers — Lufthansa once (yup, people were waiting at the gate but they decided to skip boarding entirely and send the plane away without passengers);

- Flight where I could not check in — Lufthansa once.

To repeat myself, most flights I make during the year are with SAS to/from Sweden though sometimes segment is operated by LH. So far return trips to Frankfurt with LH were mostly okay except for some delays but the last “flight” was something different.

I booked a flight FRA-PRG-FRA. The flight to Praha was cancelled because plane arrived to Frankfurt at least half an hour later than expected and after another hour it was decided it’s not good enough to fly again. Okay. So they could not find a replacement plane and rebooked me to flight at 22:15. Fine, but it turned out that I could reach Praha by train faster and cheaper (twice as cheap actually) so I decided not to wait.

Then the time for return flight came and I could not check in at all because they have modified something (that’s the message: “Cannot check in to your flight because of modifications, refer to Lufthansa counter.” And there’s no LH representative there. And if you can withstand their call centre, you’re a much better person than I am). So it was another train back to Germany (which also broke down in the middle of nowhere but at least it was resolved in an hour and a half). Maybe it’s because of the selected Cattle Lowcostish fare (Economy but without check-in baggage or seat selection) instead of the usual one but at least with SAS when I wasn’t able to take flight to Arlanda (because of Frankfurt Airport staff strike) I still had no problems flying back from there.

Call me picky (and I shan’t argue, I am picky) but I expect better statistics because the most irritating cases were happening with the certain company that I don’t fly with often and that’s comparable with SAS in quantity (but, sadly, not quality).

And that means I’ll avoid using it in the future even if that means not being able to get to some places by plane in reasonable time. There are still trains for me.

P.S. This rant is just to vent off my anger and frustration from the recent experience. And it should make me remember not to take Air Allemagne flights ever again.

P.P.S. Hopefully the next post will be more technical.

Goldie (named so after the Austrian book Bambi) and Wuschel (the squirrel), Ringo the Hare is not pictured here

The titular king.

Nice backgrounds.