Archive for the ‘NihAV’ Category

VP6 encoder design

Saturday, October 2nd, 2021

This is the penultimate post in the series (there shall be another post, on how to use the encoder, but if there’s no interest I can simply skip it, making this the last post in the series). As promised before, here I’ll present the layout and the details of my encoder.

(more…)

Is VP8 a Duck codec?

Friday, October 1st, 2021

There’s a blog out there with posts dedicated to the history of On2 (née Duck). And one particular post (archived version) brought an unsettling thought that refuses to leave me. Does VP8 belong to Duck or Baidu (yes, I’ll keep calling this company by value) codecs?

Arguments for Duck theory:

- it was released in 2008, before acquisition (which happened in 2010);

- it can be seen as an improvement of VP7, which is definitely a Duck codec;

- its documentation is as lacking as for the previous codecs.

Arguments for Baidu theory:

- it became famous after the company was bought and the codec was open-sourced;

- as a follow-up from the previous item, there is an open-source library for decoding and encoding it (I think the previous source dump had an encoder just for TMRT and maybe it was an oversight);

- it has its own ecosystem (all previous codecs were stored in AVI, this one uses WebMKV);

- I don’t have to implement it in NihAV (because I wanted the nihav_duck crate to contain decoders for all Duck formats and if VP8 is not really a Duck codec I don’t have to do anything).

So, what do you think?

VP6 — rate control and rate-distortion optimisation

Thursday, September 30th, 2021

First of all, I want to warn you that the “optimisation” part of RDO comes from mathematics, where it means selecting the element that best satisfies certain criteria. Normally we talk about optimisation as a way to make code run faster, but the term has a more general meaning and this is one such case.

Anyway, while there is a lot of theory behind it, the concepts are quite simple (see this description from a RAD guy for a short and concise explanation). To oversimplify, rate control is the part of an encoder that makes it output a stream with certain parameters (i.e. a certain average bitrate, a limited maximum frame size and such), and RDO is a way to adjust the encoded stream by deciding how many bits you want to trade for quality in each particular case.

For example, if you want to decide which kind of macroblock to encode (intra or one of several kinds of inter), you calculate how much the coded blocks differ from the original ones (that’s distortion) and add the cost of coding those blocks (aka rate) multiplied by lambda (our weight parameter that tells how much to prefer rate over distortion or vice versa). So you want to increase bitrate? Decrease lambda so fidelity matters more. You want to decrease frame size? Increase lambda so bits are more important. From a mathematical point of view the problem is solved; from an implementation point of view that’s where the actual problems start.
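The decision rule described above can be sketched in a few lines of Python. This is only an illustration, not the encoder’s actual code: the `Candidate` type, the macroblock labels and all the distortion and bit numbers are made up for the example.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str          # hypothetical macroblock type label
    distortion: float  # how much the coded block differs from the original
    bits: float        # estimated cost of coding the block this way (rate)

def rd_cost(cand, lam):
    """Rate-distortion cost: distortion plus lambda-weighted rate."""
    return cand.distortion + lam * cand.bits

def pick_mode(candidates, lam):
    """Return the candidate with the lowest rate-distortion cost."""
    return min(candidates, key=lambda c: rd_cost(c, lam))

# Made-up numbers purely for illustration.
candidates = [
    Candidate("intra", distortion=100.0, bits=300.0),
    Candidate("inter", distortion=400.0, bits=60.0),
    Candidate("inter_4mv", distortion=250.0, bits=140.0),
]

low_lambda_choice = pick_mode(candidates, lam=0.5)   # fidelity matters more
high_lambda_choice = pick_mode(candidates, lam=4.0)  # bits matter more
```

With these numbers a small lambda picks the expensive but accurate intra block, and a large lambda picks the cheap inter block. The hard part in a real encoder is getting trustworthy distortion and bit estimates in the first place.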

(more…)

VP6 encoder: done!

Wednesday, September 29th, 2021

Today I’ve finished work on my VP6 encoder for NihAV and it seems to work as expected (which means poorly but what else to expect from a failure). Unfortunately, even if the encoder is complete from my point of view, there are still some things to do: write a couple of posts on rate control/RDO and the overall design of my encoder, and make it more useful for the people brave enough to use it in e.g. Red Alert game series modding. That means adding some input format support useful for the encoder (I’ve hacked Y4M input support but if there’s a request for a lossless codec in AVI, I can implement that too) and writing a page describing how to use nihav-encoder to encode content in VP6 format (AVI only, maybe I’ll add FLV later as a joke but FLV decoding support should come first).

And now I’d like to talk about what features my encoder has and why it lacks in some areas.

First, what it has:

- all macroblock types are supported (including 4MV and those referencing golden frame);

- custom models updated per frame;

- Huffman encoding mode;

- proper quarterpel motion estimation;

- extremely simple golden frame selection;

- sophisticated macroblock type selection process;

- rudimentary rate control.

In other words, it can encode a stream that uses all but a couple of the format’s features, and at varying quality too.

And what it doesn’t have:

- interlacing! It should not be that hard to add but my principles say no to supporting it at all (except in some decoders where it can’t be avoided);

- alpha support—it’s rather easy to add but there’s little use for it;

- custom scan order—it’s not likely to give a significant gain while it’s quite hairy to implement properly (it’s not that complex per se but it’ll need a lot of debugging to get it right because of its internal representation);

- advanced profile features like bicubic interpolation filters and selecting parameters for them (again, too much work, too little fun);

- context-dependent macroblock size approximations (i.e. calculate expected size using the information about already selected preceding macroblocks instead of fixed guesstimates);

- better macroblock and frame size approximations in general (more about it in the upcoming post);

- better golden frame selection (I don’t even know what would be a good condition for that);

- dynamic intra frame selection (i.e. code a frame as I-frame where it’s appropriate instead of each N-th frame);

- proper rate control (this should be discussed in the upcoming post).

This is an example of a progressive approach to development (in the same sense as progressive JPEG coding): first you implement a rough approximation of what you want to have, then keep expanding and improving various features until some arbitrary limit is reached. A lot of the features that I’ve not implemented properly need a lot of time (and sometimes significant domain-specific knowledge) for a proper implementation, so I simply stopped where it was either working well enough or would not be fun to continue.

So, with the next couple of posts on the details not yet covered (RDO+rate control and overall design) the journey should be complete. Remember, it’s the best opensource VP6 encoder (for the lack of competition) and since I’ve managed to make something resembling an encoder, maybe you can write something even better?

How to perform fast motion search

Saturday, September 25th, 2021

To answer the obvious question with the obvious answer, brute-force searching for a decent motion vector takes an insanely long time. For example, the VP6 motion search area can be up to 63×63 pixels, and checking all possible positions there requires a lot of tries. And if you remember that VP6 has quarterpel motion compensation precision, you should multiply that number by 16 possible sub-pixel positions. Obviously, in order to reduce the number of tries, various tricks are employed.
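To put a number on it, here is a small Python sketch of an exhaustive integer-pixel search together with the candidate count for the full quarterpel case. It is an illustration under assumptions of mine: the post does not say which block difference metric is used, so the sketch uses the sum of absolute differences (SAD), and the function names and the tiny test frame are hypothetical.

```python
def sad(cur, ref, bx, by, dx, dy, size):
    """Sum of absolute differences between a block and its displaced reference."""
    total = 0
    for y in range(size):
        for x in range(size):
            total += abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
    return total

def full_search(cur, ref, bx, by, size, radius):
    """Try every integer displacement within +-radius.

    Returns (best_mv, best_sad, tries)."""
    height, width = len(ref), len(ref[0])
    best_mv, best_sad, tries = (0, 0), None, 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Skip candidates that would read outside the reference frame.
            if bx + dx < 0 or bx + dx + size > width:
                continue
            if by + dy < 0 or by + dy + size > height:
                continue
            tries += 1
            cost = sad(cur, ref, bx, by, dx, dy, size)
            if best_sad is None or cost < best_sad:
                best_mv, best_sad = (dx, dy), cost
    return best_mv, best_sad, tries

# Candidate count for the case from the text: 63x63 positions times
# 16 quarterpel sub-positions each.
exhaustive_tries = 63 * 63 * 16  # 63504 block comparisons per block

# Tiny synthetic frames: the current frame is the reference shifted by (2, 1).
ref = [[x * x + 31 * y for x in range(16)] for y in range(16)]
cur = [[(x + 2) ** 2 + 31 * (y + 1) for x in range(16)] for y in range(16)]
mv, cost, tries = full_search(cur, ref, bx=4, by=4, size=4, radius=3)
```

Even this toy search with a radius of 3 performs 49 block comparisons for a single 4×4 block, which gives an idea of why the full search area is not something you want to scan for every block.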

While the fast motion search methods I describe here are not that complex by themselves, it was rather hard to locate books where such details of developing video encoders are presented. At last I’ve found two or three books with chapters dedicated to motion compensation, plus the papers referenced there. The results of this mini-research are given below.

(more…)

VP6 — simple intraframe encoder, part 1

Sunday, September 5th, 2021

I admit that I haven’t spent much time on writing the encoder but I still have some progress to report.

(more…)

Starting work on VP6 encoder

Thursday, August 26th, 2021

It is no secret (not even to me) that I suck at writing encoders. But with NihAV being developed exactly for trying new things and concepts, why not go ahead and try writing an encoder? It is not for having an encoder per se but rather a way to learn how things work (the best way to learn things is to try them yourself after all).

There are several reasons why I picked VP6:

- it is complex enough to have different encoding concepts to try on it;

- at the same time it’s not that complex (just DCT, MC, bool coder and no B-frames, complex block partitioning or complex context-adaptive coding);

- there are no opensource encoders for it;

- there’s a decoder for it in NihAV already;

- this is not a toy format so it may be of some use for me later.

Of course I’m aware of other attempts to bring us an opensource VP6 encoder and that they all failed, but nothing prevents me from failing at it myself and documenting my path so others might fail at it faster and better.

Speaking of documenting, here’s a roadmap of things I want to play with (or played with already) and report how it went:

- DCT;

- bool coder;

- simple intraframe coding;

- motion estimation (including fast search and subpixel precision);

- rate distortion optimisation;

- rate control.

Hopefully the post about DCT will come tomorrow.

P.S. Why do I declare this in public? So that I won’t chicken out immediately.

Playing with trellis and encoding

Sunday, August 8th, 2021

I said before I want to play with encoding algorithms within NihAV and here’s another step (a previous major step was vector quantisation and a simple Cinepak encoder using it). Now it’s time for trellis search for encoding.

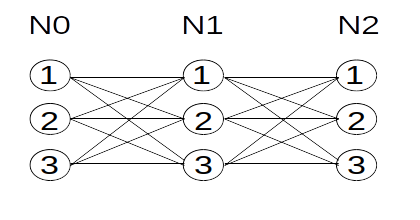

The idea by itself is very simple: you want to encode a sequence optimally (or decode transmitted sequence with distortions), so you represent your data as a set of possible states for each sample and search a path from one state to another with the minimum error. Since each state of the sample is connected with all states of the previous samples, its graph looks like a trellis:

The search itself is performed by selecting for each state a transition from a previous state that gives minimal error, then selecting a state with the least error for the last sample and tracing back the path that led to it from the beginning. You just need to store the pointer to the previous state, the error value and whatever decoder state you require.

I’ve chosen an IMA ADPCM encoder as a test playground since it’s simple but useful. The idea of the format is very simple: you have a state consisting of the current sample value and a step size used as a multiplier for the transmitted 4-bit difference value; you reconstruct the difference, add it to the previously stored value, and correct the step size (small delta: decrease the step, large delta: increase it). You have 16 possible states for each sample, so the search does not take long.

There’s another tricky question of selecting initial step size (it will adapt to the samples but you need to start with something). I select it to be close to the difference between first and second samples and actually abuse first state to store not the index of the previous state but rather a step index. This way I start with (ideally) 16 different step sizes around the current one and can use the one that gives slightly lower error in the end.

And another fun fact: this way I can use just the code for decompressing a single ADPCM sample and I don’t require actual code for compression, since the search traverses all possible compressed codes already.
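The search described above can be sketched in Python roughly as follows. This is my own minimal reading of the description, not the NihAV code: the function names are made up, squared error is assumed as the error measure, and the trick with trying several initial step sizes is left out (the starting step index is simply passed in). The tables are the standard IMA ADPCM ones.

```python
# Standard IMA ADPCM step size and index adjustment tables.
STEP_TABLE = [
    7, 8, 9, 10, 11, 12, 13, 14, 16, 17, 19, 21, 23, 25, 28, 31, 34, 37, 41, 45,
    50, 55, 60, 66, 73, 80, 88, 97, 107, 118, 130, 143, 157, 173, 190, 209, 230,
    253, 279, 307, 337, 371, 408, 449, 494, 544, 598, 658, 724, 796, 876, 963,
    1060, 1166, 1282, 1411, 1552, 1707, 1878, 2066, 2272, 2499, 2749, 3024, 3327,
    3660, 4026, 4428, 4871, 5358, 5894, 6484, 7132, 7845, 8630, 9493, 10442,
    11487, 12635, 13899, 15289, 16818, 18500, 20350, 22385, 24623, 27086, 29794,
    32767,
]
INDEX_ADJUST = [-1, -1, -1, -1, 2, 4, 6, 8]

def decode_nibble(pred, idx, code):
    """Decode one 4-bit code; return the new (sample value, step index)."""
    step = STEP_TABLE[idx]
    diff = step >> 3
    if code & 1:
        diff += step >> 2
    if code & 2:
        diff += step >> 1
    if code & 4:
        diff += step
    if code & 8:
        diff = -diff
    pred = max(-32768, min(32767, pred + diff))
    idx = max(0, min(88, idx + INDEX_ADJUST[code & 7]))
    return pred, idx

def trellis_encode(samples, start_pred, start_idx):
    """Pick 4-bit codes by trellis search; return (codes, total squared error)."""
    # A state is (total error, sample value, step index, previous state).
    # Before the first sample there is a single starting state.
    prev_states = [(0, start_pred, start_idx, -1)]
    back_pointers = []
    for target in samples:
        cur_states = []
        for code in range(16):  # one state per possible code
            best = None
            for pi, (err, pred, idx, _) in enumerate(prev_states):
                new_pred, new_idx = decode_nibble(pred, idx, code)
                total = err + (new_pred - target) ** 2
                if best is None or total < best[0]:
                    best = (total, new_pred, new_idx, pi)
            cur_states.append(best)
        back_pointers.append([state[3] for state in cur_states])
        prev_states = cur_states
    # Select the cheapest final state and trace the path back to the start.
    state = min(range(len(prev_states)), key=lambda i: prev_states[i][0])
    total_error = prev_states[state][0]
    codes = []
    for pointers in reversed(back_pointers):
        codes.append(state)  # the state number is the code for this sample
        state = pointers[state]
    codes.reverse()
    return codes, total_error
```

Note that only `decode_nibble` knows anything about the format; the search just tries all 16 codes from every surviving state, which is 256 decodes per sample.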

I hope this demonstrates that it’s an easy method that improves quality significantly (I have not conducted proper testing but in a quick test it reduced mean squared error for me by 10-20%).

It should also come in handy for video compression but unfortunately rate distortion optimisation does not look that easy…

NihAV: feature complete

Sunday, June 13th, 2021

A year ago I wrote a post about NihAV being conceptually done, now it’s even closer to perfection since I’ve finished writing a useful video player.

Writing it (and especially debugging it) was surprisingly hard so in most cases after looking at it I thought that I’d rather do something else—RE some game format, work on deflate support, just anything but that. But at last it’s done and working adequately. The previous player could just play video until it’s over (or deadlock occasionally), this one supports pausing and seeking plus it seems not to deadlock.

Conceptually it’s very simple, like any other player; it’s just that various features and limitations complicate the design. You simply open the input, read packets, discard them or decode them with a corresponding decoder, and present the result (draw the frame or send audio to the sound card). Now what to do in order to make it offer all the features I wanted from it?

First, interactivity and low(er) response latency. The latter is easy to achieve: you just put audio and video decoding into separate threads so the main loop just does the demuxing and checks for user commands. So now you have user commands that might affect the decoding threads (for example, seeking or going on to play the next file). I implemented it by setting a special flag that the decoding thread checks; while it is set, the thread discards all queued input packets until some command is received.

Another fun thing was volume control. In my audio player I simply queue samples and let SDL deal with them; here I switched to a callback and fill the requested buffer with samples adjusted by the current volume. This way a volume change is applied almost immediately instead of taking effect in a second or more, depending on the audio queue fill.

And speaking about queues, that was also a fun thing to manage. In a default implementation (demux-send-repeat) you either end up sending all packets before the decoding thread processes them all (and this will consume a lot of memory) or you block on sending via a limited message channel (which either requires a separate thread with more complicated interaction between them all, or means you can forget about interactivity). The answer is obviously to keep track of how full the channel is and not demux new packets unless you’re sure you can send them. I keep a queue for the packets or events that should be sent but for which there’s no space in the communication channel yet. Additionally I make the audio part report the current buffer fill so I know when there’s no reason to send more packets yet. Similarly for video I keep a queue of ready-to-display frames (in SDL textures, so drawing them is just one SDL blit call).
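The “do not demux unless you can send” idea can be sketched like this. It is a single-threaded Python illustration using the standard `queue` module, not the player’s actual design: the `PacketFeeder` class, its method names and the `demux_one` callback are all hypothetical, and the audio buffer fill reporting is not modelled.

```python
import queue
from collections import deque

class PacketFeeder:
    """Feeds packets into a bounded channel without blocking the main loop."""

    def __init__(self, channel):
        self.channel = channel  # bounded queue.Queue read by a decoding thread
        self.pending = deque()  # demuxed packets the channel has not accepted yet

    def flush_pending(self):
        """Move as many pending packets into the channel as currently fit."""
        while self.pending:
            try:
                self.channel.put_nowait(self.pending[0])
            except queue.Full:
                return False    # channel is full, try again on the next iteration
            self.pending.popleft()
        return True

    def step(self, demux_one):
        """One main-loop iteration: demux only when the backlog is clear."""
        if not self.flush_pending():
            return False        # nothing demuxed, the loop stays responsive
        packet = demux_one()
        if packet is not None:
            self.pending.append(packet)
            self.flush_pending()
        return True

# Usage with a channel that holds two packets and a fake demuxer.
channel = queue.Queue(maxsize=2)
packets = iter(range(5))
feeder = PacketFeeder(channel)
for _ in range(3):
    feeder.step(lambda: next(packets, None))
```

After these three iterations the channel holds two packets and one more waits in `pending`; further calls to `step` return immediately without demuxing until the consumer takes something out of the channel, so the main loop can keep polling for user commands.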

Overall, nothing particularly tricky but debugging it was still not fun. I actually ended up adding a compile-time debugging feature that will dump a lot of internal playback information into debug.log so I can figure out what actually happened there (i.e. NIHing a logger but you should not be surprised by that).

Of course it still lacks a lot of features for a serious player like proper synchronisation (with automatic framedropping and audio underrun corrections), more features (like playback at different speed, taking screenshots and switching between different input streams) and GUI. But I’m fine with the current state of my player and may enhance it later if the need arises.

Fun thing is that my player seemed to stall on MP4s. As it turned out, the problem was in the MP4 demuxer producing packets in strictly alternating order (one for the audio stream, one for the video stream, one for the audio stream again, one for the video stream…) while it should’ve output packets for the various streams for more or less the same time position (which usually means two AAC frames between video frames instead of just one). After the change my player works as expected on MP4s as well. And if I ever get to fixing and optimising the H.264 decoder it should be good enough to serve as an everyday video player (I use nihav-sndplay to listen to my music collection already).
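The difference between the two packet orders is easy to show with a small Python sketch. The function name and the timestamps are my own assumptions for the illustration (video frames every 40 ms, audio frames roughly every 21 ms); a real demuxer would take the times from the container tables.

```python
import heapq

def interleave_by_time(*streams):
    """Merge per-stream packet lists, each sorted by time, into time order.

    A packet here is a (timestamp_ms, stream_id) tuple."""
    return list(heapq.merge(*streams, key=lambda packet: packet[0]))

# Made-up timestamps: 25 fps video and audio frames about 21 ms long.
video = [(0, "v"), (40, "v"), (80, "v")]
audio = [(0, "a"), (21, "a"), (43, "a"), (64, "a"), (85, "a")]

order = "".join(stream_id for _, stream_id in interleave_by_time(video, audio))
```

With these timestamps `order` comes out as two audio packets after each video packet rather than the strict one-to-one alternation, which matches the “two AAC frames between video frames” remark above.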

I guess there’s just one of the original ideas (of what I wanted to try at the time of public NihAV release) left that I haven’t tried yet, namely to experiment on writing some DCT-based video encoder with rate control and such (maybe even for VP6). This should keep me occupied for a couple of years. Or at least inspire me to do something else instead.

Revisiting legendary Q format

Sunday, May 30th, 2021

Since I had nothing better to do I decided to look again at the Q format and try to write a decoder for NihAV while at it.

It turns out that three versions of the format are known: the one in Death Gate (version 3), the one in Shannara (version 4) and the one in Mission Control (not an adventure game this time; it’s version 5 obviously). Versions 4 and 5 differ only in minor details, the compression is the same. Version 3 uses the same principles but some coding details are different.

The main source of confusion was the fact that you have two context-dependent opcodes, namely 0xF9 and 0xFB. The first one either repeats the previous block several times or reuses the motion vector from that block. The second one is even trickier. For version 5 frames and for version 4 with mode 7 signalled it signals a series of blocks with 3-16 colours in each. But for mode 6 it signals a series of blocks with the same type as the previous one but with some parameters changed. If it was preceded by a fill block, these blocks will have fill values. After a block with patterns you have a series of blocks with patterns reusing the same colours as the original block. For a motion-compensated block you have the same kind of motion information transmitted.

But the weirdest thing IMO is the interlaced coding in version 5. For some reason (scalability? lower latency?) they decided to code a frame in two parts, so frame type 9 codes even rows of the frame and frame type 11 codes odd rows, and in this case rows are four pixels high, as that is one block height. That is definitely not something I was expecting.

All in all, the format turned out to be even weirder than I expected it to be.