A year ago I wrote a post about NihAV being conceptually done; now it’s even closer to perfection since I’ve finished writing a useful video player.

Writing it (and especially debugging it) was surprisingly hard, so most times I looked at it I decided that I’d rather do something else: RE some game format, work on deflate support, anything but that. But at last it’s done and working adequately. The previous player could only play video until it was over (or deadlock occasionally); this one supports pausing and seeking, and it seems not to deadlock.

Conceptually it’s as simple as any other player; it’s just that various features and limitations complicate the design. You simply open the input, read packets, either discard them or decode them with the corresponding decoder, and present the result (draw the frame or send audio to the sound card). So what does it take to make it offer all the features I wanted from it?

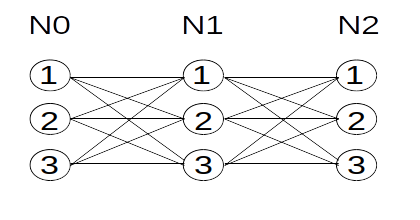

First, interactivity and low(er) response latency. The latter is easy to achieve: you put audio and video decoding into separate threads so the main loop only does the demuxing and checks for user commands. But now you have user commands that might affect the decoding threads (for example, seeking or moving on to the next file). I implemented this by setting a special flag that the decoding thread checks; while it is set, the thread discards all queued input packets until some command is received.
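Here is a minimal sketch of that flag idea, not the actual NihAV code. The `DecoderMsg` enum, the `Resume` command and the `u32` timestamp standing in for a real packet are all assumptions made for illustration. The main loop sets a shared flag, and the decoding loop drops packets while the flag is set, clearing it only when a command arrives.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::mpsc::{channel, Receiver};
use std::sync::Arc;
use std::thread;

/// Hypothetical messages sent from the main loop to a decoding thread.
pub enum DecoderMsg {
    /// Stand-in for a demuxed packet (only its timestamp here).
    Packet(u32),
    /// A command that ends the discard phase (e.g. "seek is done").
    Resume,
    /// Stop the decoding thread.
    Quit,
}

/// Decoding loop: returns the timestamps that were actually "decoded".
pub fn decoder_loop(rx: Receiver<DecoderMsg>, skip: Arc<AtomicBool>) -> Vec<u32> {
    let mut decoded = Vec::new();
    for msg in rx {
        match msg {
            DecoderMsg::Packet(ts) => {
                // While the flag is set, stale packets are simply dropped.
                if !skip.load(Ordering::Acquire) {
                    decoded.push(ts);
                }
            }
            DecoderMsg::Resume => skip.store(false, Ordering::Release),
            DecoderMsg::Quit => break,
        }
    }
    decoded
}

/// Simulates a seek: three stale packets, the flag, a command, three new packets.
pub fn demo_seek() -> Vec<u32> {
    let (tx, rx) = channel();
    let skip = Arc::new(AtomicBool::new(false));
    // Everything is queued before the thread starts so the result is deterministic.
    for ts in 0..3 {
        tx.send(DecoderMsg::Packet(ts)).unwrap();
    }
    skip.store(true, Ordering::Release); // the user asked for a seek
    tx.send(DecoderMsg::Resume).unwrap();
    for ts in 100..103 {
        tx.send(DecoderMsg::Packet(ts)).unwrap();
    }
    tx.send(DecoderMsg::Quit).unwrap();
    let worker_skip = Arc::clone(&skip);
    let worker = thread::spawn(move || decoder_loop(rx, worker_skip));
    worker.join().unwrap()
}
```

In this sketch `demo_seek()` keeps only the packets queued after the command, so the pre-seek packets never reach the decoder.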

Another fun thing was volume control. In my audio player I simply queue samples and let SDL deal with them; here I switched to a callback that fills the requested buffer with samples adjusted by the current volume. This way a volume change is applied almost immediately instead of taking effect after a second or more, depending on the audio queue fill.
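The callback approach could look roughly like the sketch below. It leaves out SDL entirely: `AudioOutput`, its percentage-based `volume` field and the `callback` method are hypothetical names that only mimic the shape of an audio callback (the device hands you a buffer and you fill all of it). In a real player the volume would have to be shared with the main thread, for example through an atomic.

```rust
use std::collections::VecDeque;

/// Hypothetical audio output state: decoded samples plus the current volume.
pub struct AudioOutput {
    queue: VecDeque<i16>,
    /// Volume in percent, 100 means "unchanged".
    pub volume: u32,
}

impl AudioOutput {
    pub fn new(volume: u32) -> Self {
        Self { queue: VecDeque::new(), volume }
    }

    /// Called by the decoder side to store decoded samples.
    pub fn push_samples(&mut self, samples: &[i16]) {
        self.queue.extend(samples.iter().copied());
    }

    /// Mimics the audio callback: the whole buffer must be filled right now,
    /// so the volume is applied at the last possible moment.
    pub fn callback(&mut self, out: &mut [i16]) {
        for dst in out.iter_mut() {
            // Output silence if the queue has run dry (underrun).
            let sample = self.queue.pop_front().unwrap_or(0);
            let scaled = i32::from(sample) * self.volume as i32 / 100;
            // Clamp so that volumes above 100% do not wrap around.
            *dst = scaled.clamp(i32::from(i16::MIN), i32::from(i16::MAX)) as i16;
        }
    }
}
```

Since scaling happens when the buffer is requested rather than when samples are queued, changing `volume` affects the very next buffer regardless of how many samples are already waiting.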

And speaking of queues, those were also fun to manage. In a straightforward implementation (demux-send-repeat) you either end up sending all packets long before the decoding thread processes them (which consumes a lot of memory) or you block on sending via a bounded message channel (which either requires a separate thread with more complicated interaction between them all, or means you can forget about interactivity). The answer is obviously to keep track of how full the channel is and not demux new packets unless you’re sure you can send them. I keep a queue for the packets or events that should be sent when there’s no space in the communication channel for them yet. Additionally I make the audio part report the current buffer fill so I know when there’s no reason to send more packets yet. Similarly, for video I keep a queue of ready-to-display frames (in SDL textures, so drawing them is just one SDL blit call).
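The "pending queue in front of a bounded channel" part can be sketched with the standard library alone. `PacketFeeder` and its methods are hypothetical names, and this is just one way to arrange it under the assumption that a `sync_channel` is used. The main loop never blocks: it calls `flush()` and demuxes another packet only when that returns `true`.

```rust
use std::collections::VecDeque;
use std::sync::mpsc::{sync_channel, Receiver, SyncSender, TrySendError};

/// Hypothetical helper: a bounded channel plus a queue of not-yet-sent items.
pub struct PacketFeeder<T> {
    tx: SyncSender<T>,
    pending: VecDeque<T>,
}

impl<T> PacketFeeder<T> {
    /// Creates the feeder and the receiving end for the decoding thread.
    pub fn new(capacity: usize) -> (Self, Receiver<T>) {
        let (tx, rx) = sync_channel(capacity);
        (Self { tx, pending: VecDeque::new() }, rx)
    }

    /// Remembers an item that should be sent once there is room for it.
    pub fn queue(&mut self, item: T) {
        self.pending.push_back(item);
    }

    pub fn pending_len(&self) -> usize {
        self.pending.len()
    }

    /// Sends as much as fits without blocking.
    /// Returns true when nothing is pending, i.e. it is fine to demux more.
    pub fn flush(&mut self) -> bool {
        while let Some(item) = self.pending.pop_front() {
            match self.tx.try_send(item) {
                Ok(()) => {}
                Err(TrySendError::Full(item)) => {
                    // No room yet: put the item back and try again later.
                    self.pending.push_front(item);
                    return false;
                }
                Err(TrySendError::Disconnected(_)) => {
                    // The decoding thread is gone, nothing left to feed.
                    self.pending.clear();
                    return false;
                }
            }
        }
        true
    }
}
```

The point of the design is that `try_send` hands the item back when the channel is full, so nothing is lost and the main loop stays free to handle user input in the meantime.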

Overall, nothing particularly tricky, but debugging it was still not fun. I actually ended up adding a compile-time debugging feature that dumps a lot of internal playback information into debug.log so I can figure out what actually happened there (i.e. NIHing a logger, but you should not be surprised by that).

Of course it still lacks a lot of what a serious player needs: proper synchronisation (with automatic frame dropping and audio underrun correction), extra features (like playback at different speeds, taking screenshots and switching between input streams) and a GUI. But I’m fine with the current state of my player and may enhance it later if the need arises.

A fun thing is that my player seemed to stall on MP4s. As it turned out, the problem was the MP4 demuxer producing packets in strictly alternating order (one for the audio stream, one for the video stream, one for the audio stream again, one for the video stream…) while it should have output packets for the various streams at more or less the same time position (which usually means two AAC frames between video frames instead of just one). After the change my player works as expected on MP4s as well. And if I ever get to fixing and optimising the H.264 decoder it should be good enough to serve as an everyday video player (I already use nihav-sndplay to listen to my music collection).
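To illustrate the difference, here is a small sketch of picking packets by time position instead of alternating between streams. It is not the NihAV demuxer: `Packet`, `make_stream` and the millisecond timestamps are made up for the example, and the audio frame duration is rounded to 20 ms (against 40 ms video frames) just to keep the numbers simple.

```rust
use std::collections::VecDeque;

/// Hypothetical packet description: stream index and timestamp in milliseconds.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Packet {
    pub stream: usize,
    pub ts_ms: u64,
}

/// Builds a stream of `count` packets spaced `step_ms` apart (illustration only).
pub fn make_stream(stream: usize, step_ms: u64, count: u64) -> VecDeque<Packet> {
    (0..count)
        .map(|n| Packet { stream, ts_ms: n * step_ms })
        .collect()
}

/// Returns the queued packet with the lowest timestamp across all streams
/// (ties go to the stream with the lower index).
pub fn next_packet(streams: &mut [VecDeque<Packet>]) -> Option<Packet> {
    let (_, idx) = streams
        .iter()
        .enumerate()
        .filter_map(|(i, q)| q.front().map(|p| (p.ts_ms, i)))
        .min()?;
    streams[idx].pop_front()
}

/// Drains all streams in time order.
pub fn interleave_by_time(streams: &mut [VecDeque<Packet>]) -> Vec<Packet> {
    let mut out = Vec::new();
    while let Some(pkt) = next_packet(streams) {
        out.push(pkt);
    }
    out
}
```

With video as stream 0 and audio as stream 1, this produces one video packet followed by two audio packets, repeated, so neither decoder is starved while the other one's queue fills up.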

I guess there’s just one of the original ideas (of what I wanted to try at the time of the public NihAV release) left that I haven’t tried yet, namely to experiment with writing some DCT-based video encoder with rate control and such (maybe even for VP6). This should keep me occupied for a couple of years. Or at least inspire me to do something else instead.