Let’s look at the last category of Dingo Pictures cartoons. Because it’s rather large, I’ve split it into two parts.


Dingo Pictures Works: Classics pt. 1

H.263 And MPEG-4 ASP—The Root of Some Evil

November 4th, 2017

As you might know (but still not care), I’m working on adding full RealMedia support to NihAV, starting with video. So I’ve made it to decoding RealVideo 2 and I have some not-so-nice words to say about H.263 and MPEG-4 ASP.

First, the creeping featuritis in the standards: MPEG-4 part 2 from 2001 has annexes A-O (the version from 2004 has only annexes A-M for some reason) while ITU H.263 (version from 2005) has annexes A-X plus two appendices. For comparison, ITU H.264 from 2017 has annexes A-J, same for MPEG-4 part 10 😉 Mind you, some annexes are for informative stuff (e.g. how an encoder should work or a list of patent claims) but others add new coding features. So, for MPEG-4 part 2 (2001) we have 15 annexes, 7 of them are informative and only a couple of the normative annexes add new features. For ITU H.263, about 15 out of 24 annexes introduce new coding modes and other enhancements (different treatment of motion vectors, loop filter, an alternative macroblock coding mode, PB-frame type and a lot more). The features are actually grouped into baseline(-ish) H.263 and H.263+.

Second, neither of them is really suitable for video coding. I know, it might sound strange, but each of these standards is an unholy mix of various codecs. H.263 mixes several codecs from different generations together (the initial H.263 did not have B-frames, later they added PB-frames and finally B-frames too, there are at least two different ways to code macroblocks, etc.), while MPEG-4 part 2 is for coding 3D video and also happens to specify a method to code video texture on those 3D shapes (there are no actual frames there, just VOPs, i.e. Video Object Planes). And yet, because the compression methods there provided an improvement over H.262 (aka MPEG-2 Video), they were used in various forms with various hacks in many multimedia formats. There we have a very wide gamut: from RealVideo 1 and Sorenson Spark (aka FLV1) with just I- and P-frames, to Intel I.263 that had PB-frames, to RealVideo 2 with many features of H.263+ (including B-frames), to M$ MPEG-4 decoders, to WMV2.

And here we have the problem: both formats grew from the joint effort known as H.262 or MPEG-2 Video, so obviously it was a good idea to abuse the same decoder structure to handle all possible variations of H.263 and video texture coding from MPEG-4 part 2 and then add all the decoder-specific hacks. As a result you get a mess that’s hard to comprehend because it usually depends on many context variables set in a specific manner for a specific codec. Hence the post title.

To demonstrate this I’ll show how the same feature is handled in different H.263/MP4p2-based codecs.

Sequence and frame headers

Obviously it differs for every codec. Some rely on container-provided width and height, some have dimensions coded per GOP or per individual frame, some codecs keep only the meaningful bits in the frame header, others store all feature bits and error out on unsupported configurations.

Frame types

- Intel I.263: I, P, PB

- RealVideo 1: I, P

- RealVideo 2: I, P, B

- Sorenson Spark: I, P, droppable P

- WMV1: I, P

- WMV2: I, P, X8 (alternative I-frame coding)

- H.263 in general: I, P, PB, B, EI, EP (last two are enhancement layer picture types for scalable coding)

- MPEG-4: I, P, B and S (last one is sprite-coded picture)

Block coding

- Intel I.263: H.263 codes

- RealVideo 1: H.263 codes with special codes for I-frame DCs

- RealVideo 2: H.263+ AIC mode (advanced I-frame coding) plus H.263 P- or B-frames

- Sorenson Spark: H.263 codes with a custom handling of AC escapes

- WMV1/2: M$MPEG-4 codes

Motion vector reconstruction

- H.263: simply add predictor vector

- H.263 UMV: depending on predictor value and difference range wrap it or not (see ITU H.263 D.2 for proper explanation)

- MPEG-4: `if (mv < low) mv += range; if (mv > high) mv -= range;`

- M$MPEG-4: `if (mv <= -64) mv += 64; if (mv >= 64) mv -= 64;`

(And there are different ways to predict motion vectors too!)
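The reconstruction rules listed above can be put side by side in one small C function. This is only a sketch under assumptions: the names `mv_mode`, `mv_limits` and `mv_reconstruct` are made up for illustration, the MPEG-4 limits are passed in by the caller instead of being derived from the stream, and the UMV variant is left out because its rule is better read from ITU H.263 D.2 itself.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical names for illustration; not taken from any real decoder. */
enum mv_mode { MV_H263, MV_MPEG4, MV_MSMPEG4 };

/* Allowed interval and wrap distance for the MPEG-4 rule.
 * A real decoder derives these from the stream; here the caller supplies them. */
struct mv_limits { int low, high, range; };

/* Reconstruct one motion vector component from its predictor
 * and the decoded difference. */
static int mv_reconstruct(enum mv_mode mode, int pred, int diff,
                          const struct mv_limits *lim)
{
    int mv = pred + diff;

    switch (mode) {
    case MV_H263:
        /* plain addition, nothing else */
        break;
    case MV_MPEG4:
        assert(lim != NULL && lim->range > 0);
        if (mv < lim->low)  mv += lim->range;
        if (mv > lim->high) mv -= lim->range;
        break;
    case MV_MSMPEG4:
        /* fixed wrap distance of 64 */
        if (mv <= -64) mv += 64;
        if (mv >=  64) mv -= 64;
        break;
    }
    return mv;
}
```

With illustrative limits of low = -32, high = 31 and range = 64, a predictor of 30 and a difference of 5 wraps to -29 in the MPEG-4 mode, while the plain H.263 mode returns 35.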

There are even more quirks than I listed here but it should give you an idea of what a fine mess these formats are and why the code that supports them all tends to turn into a huge mess. I tried to solve it in NihAV by having a template decoder for H.263 that calls a bitstream parser for the actual codec-specific parsing and keeps some quirks inside specific structures (like an MV type that adds vectors differently depending on the current mode). I still have more features to take into account (like slices, AC prediction and B-frames) so I’ll have to redesign it before I can support RealVideo 2 properly.
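To make the split between a shared decoder and a codec-specific parser more concrete, here is a rough C sketch using a table of function pointers. Every name in it is hypothetical and it is not NihAV's actual interface; it also ignores macroblock headers, skipped blocks and motion compensation, and only shows who calls whom.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Every name below is hypothetical; this is not NihAV's actual interface. */
enum pic_type { PIC_I, PIC_P, PIC_B };

struct pic_info {
    enum pic_type type;
    int width, height;
    int quant;
};

/* Codec-specific part: knows the bitstream syntax and nothing else. */
struct block_parser {
    void *priv;
    int (*parse_picture_header)(void *priv, struct pic_info *info);
    int (*decode_block)(void *priv, const struct pic_info *info, short coeffs[64]);
};

/* Shared part: drives the parser; dequantisation, IDCT and MC would live here. */
static int decode_picture(const struct block_parser *bp, int *blocks_done)
{
    struct pic_info info;
    short coeffs[64];
    int i, nblocks;

    assert(bp != NULL && blocks_done != NULL);
    *blocks_done = 0;
    if (bp->parse_picture_header(bp->priv, &info) < 0)
        return -1;
    /* 16x16 macroblocks with six 8x8 blocks each (4 luma + 2 chroma, 4:2:0) */
    nblocks = ((info.width + 15) / 16) * ((info.height + 15) / 16) * 6;
    for (i = 0; i < nblocks; i++) {
        if (bp->decode_block(bp->priv, &info, coeffs) < 0)
            return -1;
        /* common reconstruction of the block would go here */
        (*blocks_done)++;
    }
    return 0;
}

/* Toy parser standing in for a real codec: fixed 32x16 I-picture, empty blocks. */
static int toy_header(void *priv, struct pic_info *info)
{
    (void)priv;
    info->type = PIC_I;
    info->width = 32;
    info->height = 16;
    info->quant = 8;
    return 0;
}

static int toy_block(void *priv, const struct pic_info *info, short coeffs[64])
{
    (void)priv;
    (void)info;
    memset(coeffs, 0, 64 * sizeof(coeffs[0]));
    return 0;
}
```

Plugging `toy_header` and `toy_block` into a `struct block_parser` and calling `decode_picture` walks over 12 blocks (2 macroblocks of 6 blocks each). A real codec would supply its own pair of callbacks and keep its quirks behind them.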

But then maybe I’ll add Vivo Media format support for old times’ sake (it’s the funniest one, with codebooks stored as strings of ones and zeroes like “0000 0011 110” inside the binary and with “End” signalling the codebook end).
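Turning such a textual codebook entry back into a code value and a bit length takes only a few lines. The sketch below assumes nothing beyond what is described above (bits written as characters, spaces as separators, “End” as the terminator); the helper name `parse_code_string` is made up, and real tables may carry extra fields.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Parse a textual code such as "0000 0011 110" into its value and bit length.
 * Returns 1 on success, 0 for the "End" marker, -1 on malformed input.
 * Hypothetical helper for illustration only. */
static int parse_code_string(const char *s, uint32_t *code, int *len)
{
    uint32_t val = 0;
    int bits = 0;

    assert(s != NULL && code != NULL && len != NULL);
    if (strcmp(s, "End") == 0)
        return 0;
    for (; *s != '\0'; s++) {
        if (*s == ' ')
            continue;               /* spaces only group the digits */
        if (*s != '0' && *s != '1')
            return -1;
        if (bits == 32)
            return -1;              /* does not fit into 32 bits */
        val = (val << 1) | (uint32_t)(*s - '0');
        bits++;
    }
    if (bits == 0)
        return -1;
    *code = val;
    *len = bits;
    return 1;
}
```

For the example string “0000 0011 110” this gives an 11-bit code with the value 30.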

Dingo Pictures Works: For The Youngest Ones

October 24th, 2017

So, it’s time to put the spotlight on even more Dingo Pictures cartoons! And today we’re talking about the cartoons aimed at the youngest audience (even though all Dingo Pictures cartoons are rated FSK 0, the German version of the Hays code saying “appropriate for ages 0 or older”, some of them are for a more grown-up audience and little children won’t be able to appreciate them).


RMHD: A More Detailed Look

October 14th, 2017

Two years ago I made some predictions about the upcoming RealMedia HD, and little did I know that it had been finished in 2015! So I’ve finally had a look at it and here are some details.

First of all, it seems to be China-oriented since the only version with RMHD support I was able to find (even from the US site) was the Chinese version of RealMedia player (stream in peace…). And since it’s China, it bundles CEmpeg libraries with RMHD support built in. Good luck obtaining the modified source though.

The actual decoder was in a separate library though with the usual interface for RealVideo decoders and obviously I could not resist looking inside.

It turns out that RMHD corresponds to RealVideo 11 or RV60. Either they thought it’s too advanced to be merely RV50 or NGV was intended to be RV50 but they’ve buried it in the same grave with MPEG-3 and such.

Anyway, it’s time for juicy technical details.

RV60 is based on ITU H.EVC or its draft. It is oriented towards multi-threaded decoding, and they have cut out a lot of crap and thrown it away, which I fully approve of. It’s the problem with many standardised codecs: you have so much flexibility in configuring coding parameters that you have to invent special objects to signal coding parameters for the following group of frames unless you want to waste 10% of bitrate on them in every slice header; and then you invent profiles because not all of the features can be supported by existing hardware (for example, because they’ve not been added to the standard yet). RV60 has a rather simple frame header, coding units are always of size 64 and they seem to comprise all three planes instead of coding planes separately.

The biggest disappointment is motion compensation of course. RV2 had 1/2-pel MC, RV3 had 1/3-pel MC, RV4 had 1/4-pel MC. I obviously expected 1/5-pel MC for RV5 but instead they’ve stopped at 1/4-pel MC (with the bog-standard 1 -5 20 20 -5 1 filter for luma and bilinear interpolation for chroma too).
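For reference, this is roughly how the 1 -5 20 20 -5 1 filter produces a half-pel luma sample. Only the taps come from the text above; the rounding (add 16, divide by 32) and the clipping to 8 bits follow the way H.264 uses the same taps and are an assumption as far as RV60 goes. The function names are made up.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Clamp an intermediate value to the 8-bit sample range. */
static uint8_t clip_u8(int v)
{
    return v < 0 ? 0 : (v > 255 ? 255 : (uint8_t)v);
}

/* Half-pel sample between src[2] and src[3], computed from six neighbours.
 * Taps are 1 -5 20 20 -5 1 (sum 32); rounding and clipping are assumed
 * to work as in H.264. */
static uint8_t luma_halfpel(const uint8_t *src)
{
    int sum;

    assert(src != NULL);
    sum = src[0] - 5 * src[1] + 20 * src[2] + 20 * src[3] - 5 * src[4] + src[5];
    /* Division instead of a shift keeps negative sums well defined in C;
     * negative results are clipped to zero anyway. */
    return clip_u8((sum + 16) / 32);
}
```

A flat area stays flat, and a hard edge from 0 to 255 yields 128 halfway across it. The negative taps can overshoot on sharp detail, which is why the clipping step is needed.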

Spatial (aka intra) prediction is very close to H.EVC as well.

Transforms are present in 4×4, 8×8 and 16×16 sizes that are some poor integer approximations of DCT.

And now for the juiciest part: coefficients coding! Coefficients are coded with lots of context-adaptive codebooks, for intra/inter, luma/chroma and various quantisers. And since it’s RealVideo and not Thor (and its Norwegian developer does not seem to work on it any more) all codebooks are static (over 32k entries in total) and stored in a compact and obfuscated form. Compact means that the description has only code lengths packed into nibbles and obfuscated means they were XORed with a string containing the name and mail of the guy who probably generated those codebooks (this reminds me a bit of Cineform HD and Sierra AGI resources) and it makes me shout “RealLy!? What were you trying to achieve with that?”. I’ll look closer at the actual coefficient coding a bit later (or significantly later, when I have a desire to do so) but so far it looks like the coefficients are coded in 4×4 subblocks but in general following the H.EVC coding scheme.
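Undoing that kind of packing is not hard once you know the key. The sketch below shows the general idea only: the nibble order (high nibble first), the way the key string repeats over the data and the function name are all assumptions, not something taken from the actual RV60 decoder.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* De-obfuscate and unpack code lengths.
 * Assumptions for illustration: each byte is XORed with a cyclically repeated
 * key string and then holds two 4-bit lengths, high nibble first.
 * 'lens' must have room for 2 * src_len entries; returns the entry count. */
static size_t unpack_code_lengths(const uint8_t *src, size_t src_len,
                                  const char *key, uint8_t *lens)
{
    size_t key_len, i;

    assert(src != NULL && key != NULL && lens != NULL);
    key_len = strlen(key);
    assert(key_len > 0);
    for (i = 0; i < src_len; i++) {
        uint8_t b = (uint8_t)(src[i] ^ (uint8_t)key[i % key_len]);
        lens[2 * i]     = (uint8_t)(b >> 4);
        lens[2 * i + 1] = (uint8_t)(b & 0x0F);
    }
    return 2 * src_len;
}
```

The resulting lengths would then be fed to whatever routine assigns the actual codes; that part is not covered here.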

Deblocking seems to be present and depends on block size. SAO is not present it seems (and I don’t miss it either).

There seem to be only three frame types (no RADL-, WTFL- or AltGr-frames) but the frames may have multiple references.

Overall, my predictions turned out to be mostly true. I should be surprised, I guess.

I’ve yet to see any real samples too, but this makes it one of the best H.EVC-based codecs (better than actual H.EVC or VPix, because nobody cares about it) so there’s nothing much to complain about.

P.S. I already have a working RealVideo 1 decoder in NihAV, so maybe the first open-source decoder for RV60 will be in NihAV too.

Dingo Pictures Works: Adventures

October 3rd, 2017

This category can be alternatively titled wild animal adventures and it contains probably the most famous Dingo Pictures cartoons.


Dingo Pictures Works: Fairy Tales

September 22nd, 2017

There are only three stories in this category and six or seven in the remaining ones, so I don’t have to split this post into two parts.


Dingo Pictures Works: Thrillers

September 11th, 2017

Today I’m covering the great works from Dingo Pictures. I intend to split the review into roughly the same categories as they are put on the official website and today we start with the first section. Its name is “Krimis” in German, which I think is more appropriately translated as “thriller” than “mystery story” or “detective story”.


Dingo Pictures: Art Style

September 1st, 2017

The prolific German animation studio made 28 animated films during the 1990s and early 2000s and obviously they’ve managed to develop their own unique style. In this post I’ll try to describe it.

Three Problems in Supporting Multimedia Formats

August 31st, 2017

As you probably don’t care, I’m working on a RealMedia demuxer for NihAV. And it’s very straightforward: chunks without nesting, a version field to guard against surprises, clean layout. The only peculiarities it has are audio data interleavers and the so-called logical stream (the special entry that describes how to select streams for streaming depending on the bitrate available). And yet the implementation for this format in libavformat is quite complex and baffling. This observation led me to playing Captain Obvious and stating these three problems:

- Following specification. Unless it’s an ISO/IEC or ITU codec you usually have a rather lacking specification with details omitted (or, as DT$ representatives put it, “but we have it in the SDK!”, which helps a lot when you can download only the ETSI paper). In some cases the original implementation is the only specification you have. I’m no stranger to working with binary specifications but they still quite often don’t say what to expect in some cases (and then fuzzing happens…);

- Supporting hacks and abuses of specification. Two examples: MP3 and AVI. Or MP3 in AVI. For instance, MP3 has an optional CRC field (so if you don’t want CRC you simply don’t put it there) but I’ve seen samples that put a zero checksum there instead. Or in AVI you’re supposed to have `42db` chunks for uncompressed video frames, `42dc` for compressed video frames and `42wb` for audio data. In reality you can have `dc` and `db` identifiers mixed in the same stream or even `0041` chunks put inside a `LIST rec` chunk (that’s Indeo 4.1 in CivII clips). And of course there are many many more examples that everybody who has encountered them tries to forget;

- Seeking. You might wonder how seeking gets here and I’ll tell you how: most formats are not designed for random seeking and even if they are, users would still want to ignore indices, jump to a random position and find the start of the next chunk and its timestamp as well. And in `libavformat` that is performed by a binary search that invokes a special `read_timestamp` function of the demuxer (if present) which is supposed to do exactly that: searching for a packet start and reporting a timestamp.
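The mixed `dc`/`db` identifiers from the AVI example above are the kind of hack a demuxer simply has to swallow. Here is a minimal sketch of a tolerant chunk ID classifier, assuming a two-digit decimal stream number followed by a two-character type; the enum and function names are made up for illustration and are not libavformat's.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

enum avi_chunk_kind { AVI_CHUNK_VIDEO, AVI_CHUNK_AUDIO, AVI_CHUNK_OTHER };

/* Split a 4-character chunk ID such as "42dc" into stream number and kind.
 * '*stream' is set to -1 when the ID does not start with two digits.
 * Hypothetical helper; stream numbers are assumed to be decimal. */
static enum avi_chunk_kind avi_classify_chunk(const char *id, int *stream)
{
    assert(id != NULL && stream != NULL);
    *stream = -1;
    if (!isdigit((unsigned char)id[0]) || !isdigit((unsigned char)id[1]))
        return AVI_CHUNK_OTHER;
    *stream = (id[0] - '0') * 10 + (id[1] - '0');
    /* accept both 'db' and 'dc' as video since real files mix them */
    if (id[2] == 'd' && (id[3] == 'b' || id[3] == 'c'))
        return AVI_CHUNK_VIDEO;
    if (id[2] == 'w' && id[3] == 'b')
        return AVI_CHUNK_AUDIO;
    return AVI_CHUNK_OTHER;
}
```

Both `42db` and `42dc` come out as video for stream 42, `42wb` as audio, and oddities like `0041` land in the “other” bucket where codec-specific special cases would have to pick them up.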

The moral of the story: if you can afford to ignore stupid user requests, do so and cherish the fact that you can. In NihAV I’m going to implement seeking only for formats that allow it (by reading an index) or by more generic linear seeking that skips frames. This should be enough for my needs and it’ll keep the code simple too.
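Index-based seeking of the kind mentioned here boils down to a lookup in a sorted table. A minimal sketch, assuming the index has already been read into an array sorted by timestamp; the structure and function names are hypothetical and not NihAV's.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One index entry: timestamp of a seekable frame and its file offset. */
struct index_entry {
    int64_t  ts;
    uint64_t pos;
};

/* Return the position in 'idx' of the last entry with ts <= target,
 * or -1 if the target lies before the first entry.
 * 'idx' must be sorted by timestamp in ascending order. */
static long index_seek(const struct index_entry *idx, size_t count, int64_t target)
{
    size_t lo = 0, hi = count;

    assert(idx != NULL || count == 0);
    /* binary search for the number of entries with ts <= target */
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;
        if (idx[mid].ts <= target)
            lo = mid + 1;
        else
            hi = mid;
    }
    return (long)lo - 1;
}
```

The demuxer would then jump to `idx[result].pos` and, if needed, skip frames linearly until the requested timestamp is reached.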

Now that it’s become a bit colder I might actually resume my work on NihAV and, even more importantly, on describing the Dingo Pictures art style and works.

A Bit About Legendary German Animation

August 5th, 2017

If you talk about German films as a foreigner you might know some good ones like the influential Fritz Lang movies (for Frau im Mond they hired Hermann Oberth himself and as a result their depiction of space travel looks much more realistic than in modern Hollywood flicks) or Gojko Mitić adventure features (the Eastern Westerns). But if you’re not from Germany, what German cartoons do you know? Looks like the only German cartoons that got some widespread attention are those from Dingo Pictures.

Dingo Pictures is a company located in Taunus that produced about thirty Hess(l)ich cartoons in the second half of the 1990s. Some of those were completely unique and some were ripped off by D*sney and Don Bluth earlier.

Dingo Pictures has their own unique and easily recognizable style. But before I explain it, here are the eponymous animals in one of their cartoons:

So, Dingo Pictures was a pioneer, combining computer-drawn animation with 2D drawn backgrounds (watercolours no less!). Also, like the father of anime, Osamu Tezuka, the company had a cast of actors appearing in every cartoon.

For example:

The Bear (he often changes scenes and complains about everything)


And here’s the star of all Dingo Pictures cartoons, the one and only Wabuu:

Wabuu!


In case you didn’t know, Wabuu was so popular that he had his own original cartoon, a title song (that can be heard in several other cartoons too) and even audio books! Even now the DVD with his own cartoon costs at least twenty Euro on Amazon and about ten Euro used (for comparison, you can buy almost any other used DVD with a Dingo Pictures cartoon for one eurocent).

Anyway, we were talking about the style. It’s hard to express in words what makes Dingo Pictures cartoons so charming but I think the phrases “record-mending animation quality”, “copy-pasting everything”, “reusing the same scenes in other cartoons”, “more padding than Star Trek The Motionless Picture” and “dull voice acting” would do.

Here are some shots from one of their longer films, King of the Animals (or Lion King for short); don’t mind the quality, I tried to be lazy:

Title card. One of the best ones, honestly.


Every animal is uniquely redrawn.


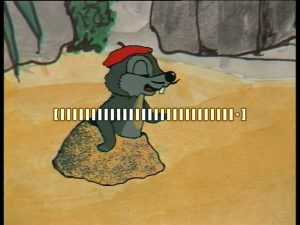

One of the moles (as you can guess from its look this mole is Italian).

The story is very typical: the lion rules the jungle full of monkeys, hippos, crocodiles and vultures (and hares, squirrels and bears; the bear picture above is from this cartoon). One day a monkey finds diamonds but they decide not to mine them for fear of humans coming. With the birth of his son, the lion king loses interest in ruling and the black panther seizes power through cheating and false promises and exiles the king. Later, with the help of the snake, the vultures and the bear, the panther is defeated. If you think you’ve heard this story elsewhere, don’t worry: it has unexpected twists in it.

And in the end we have scenes like this:

The vultures asked for a computer with phone and modem for their help.


The Black Panther is defeated!


BTW the snake enjoys reading books and quotes Shakespeare, Goethe, Karl Marx and Gorbachev. If you find this strange, how could you get past the fact that the panther’s name is Bocassa?

How can one not enjoy masterpieces like this! Oh, and every time I hear sirens I remember the duck from Animal Football, that’s how much their art has touched me.

There’s a sequel to it, simply called King of the Animals The Second Part but it’s of lesser quality IMO.

The other noteworthy cartoons are Aladdin (the genie there is a famous actor who is not Robin Williams), Animals Football (there they’ve copy-pasted all their animal characters and then some), Bremen Musicians (it has a live narrator filmed, not just animation), The Case for Mouse Police (it simply needs to be seen to be believed) and of course Wabuu.

P.S. For some reason DVDs are distributed by P*wer Channel GmbH and don’t mention the original creator anywhere except in the video. They are that modest.

P.P.S. Honestly, I don’t think I’ve heard about any other German cartoons. But these cartoons have reviews in BaidUTube channels of people from countries like Canada and Sweden (the latter is in Swedish of course, actually Wabuu song sounds even better in Swedish than in German).

Goldie (named so after the Austrian book Bambi) and Wuschel (the squirrel), Ringo the Hare is not pictured here

The titular king.

Nice backgrounds.