First of all, I want to warn you that “optimisation” part of RDO comes from mathematics with its meaning being selecting an element which satisfies certain criteria the best. Normally we talk about optimisation as a way for code to run faster but the term has more general meaning and here’s one of such cases.

Anyway, while there is a lot of theory behind it, the concepts are quite simple (see this description from a RAD guy for a short concise explanation). To put it oversimplified, rate control is the part of an encoder that makes it output stream with the certain parameters (i.e. certain average bitrate, limited maximum frame size and such) and RDO is a way to adjust encoded stream by deciding how much you want to trade bits for quality in this particular case.

For example, if you want to decide which kind of macroblock you want to encode (intra or several kinds of inter) you calculate how much the coded blocks differ from the original one (that’s distortion) and add the cost of coding those blocks (aka rate) multiplied by lambda (which is our weight parameter that tells how much to prefer rate over distortion or vice versa). So you want to increase bitrate? Decrease lambda so fidelity matters more. You want to decrease frame size? Increase lambda so bits are more important. From mathematical point of view the problem is solved, from implementation point of view that’s where the actual problems start.

Let’s start with the rate control. The idea is rather simple: you have bit budget that you need to spend on frames without undershooting or overshooting it much. So at first I calculate the amount of bits that should be spend on the current frame (and multiply it by three for intra frame since coding intra frame takes more bits), estimate the appropriate quantiser and, after the frame has been encoded, update the bit budget and adjust lambda depending on how the predicted and actual frame sizes mismatch.

And here are the things I ignored in my encoder: what should be the ratio between intra and inter frame sizes? by how much to adjust lambda when the predicted and actual frame sizes are too different? should the quantiser be adjusted in addition to lambda? should I actually search and read the papers dedicated to this problem?

My current scheme is simple: I encoded various content at all possible quantisers and gathered statistics on how much an average intra or inter MB takes space with the given quantiser (and in bool coder or Huffman mode). Add the average frame header (also dependent on the quantiser and inter/intra) and you can predict frame size at a given quantiser. And of course since frame size depends on the content coded, that prediction is not always correct and I adjust lambda. If the predicted size by 10% or more larger that the actual one, I decrease lambda by 1/32 (initially it’s one since the metric I chose for size seem to give numbers of similar magnitude as the sum of absolute differences that I use for distortion). Similarly if the projected size is significantly smaller than the actual one, I increase lambda by 1/32.

As you can see, there are many things that can be improved here but I’m satisfied with just this simple scheme. So let’s move to size estimation and RDO.

I mentioned previously, in the post about bool coder, that I use 1/8th of a bit as a metric for size estimation. I did it because the amount of nits (as I named them) required to code a probability can be fit into a byte and it gives good enough resolution. So I ended up using it in other parts of the code that involve calculating coded size.

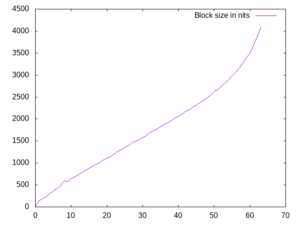

Unfortunately you can’t calculate the size of the coded macroblock directly (for RDO-based decisions) since each frame uses static probabilities and you need to calculate statistics for the whole frame and only then you can find out how much the current macroblock will take. So I took the next best thing—guesstimates! As with frame sizes, I encoded a significant amount of various content with all possible quantisers and calculated an average of what it takes to encode a block with N non-zero coefficients.

Since I suck at mathematics, I can’t recognize the curve and what equation describes it (I have a suspicion that it can be approximated by something like ax^0.9+bx^1.2+c but I don’t know how to estimate those powers and coefficients and I don’t want to repeat that process for other quantisers and generalise the formula for all of them). That’s why I simply store a table of average values for the block for all 65 possible non-zero coefficient counts for 16 quantisers (3, 7, 11, … 63).

Probably the proper way would be to maintain statistics and update it based on the actual encoded data but I do not want to waste much time doing that. After all, the idea was to try the concepts and see how they work and not more than that. For the same reason I use static estimates for the macroblock data without applying DC and MV prediction.

In theory one can also process several frames in advance to see what statistics they have, split the portion of bit budget between them according to that and maybe repeat encoding of the same frame with different parameters until the target size is achieved.

As you can see, there is a lot room for improvement here, both for a general framework and for codec-specific bits. But since I prefer tinkering to the fine-tuned adjustments, I’m happy with what I’ve got so far.

[…] type selection process is simple: rate control module keeps lambda value for RDO decision (see my previous post for more details) so the best between intra MB, inter MB referencing previous frame and inter MB […]